Artificial Intelligence and Models

João Paulo Carvalho, May 10, 2019

In the present Spring of Artificial Intelligence (AI), an area of knowledge has been forgotten. It is a relevant area, without which AI cannot progress in areas such as social sciences, urbanism, health (beyond prognosis), or software development. We are talking about complex knowledge representation.

The current boom of Artificial Intelligence

2019 has been a year of great enthusiasm for Artificial Intelligence in Portugal, one of the most dynamic innovation ecosystems in Europe. The excellent “Brain” exhibition at Gulbenkian Foundation, from May to June, was visited by tens of thousands of people. Artificial Intelligence was the theme of a Fidelidade-Culturgest conference cycle, with IST’s scientific support, spread across three sessions in the second quarter of the year. Vodafone organized its Business Conference at Alfândega do Porto on May 8th on the new era of Artificial Intelligence.

Partnering with IST, Quidgest has already organized two AI+ MDE Talks. Portuguese technology companies, like Unbabel, Talkdesk, Opentalk, DefinedCrowd, and Feedzai, are being praised by the media for their innovative use of artificial intelligence. Researchers have come out of relative anonymity and are being recognized by the general public: Pedro Domingos, Ana Paiva, Luisa Coheur, Mario Figueiredo, Arlindo Oliveira, Luis Moniz Pereira, Virginia Dignum, Manuela Veloso. Throughout the year, AI has mobilized researchers, students, investors, decision-makers, and entrepreneurs, but also a growing number of curious people who just want to know about the subject.

Ups and Downs of Artificial Intelligence throughout its history

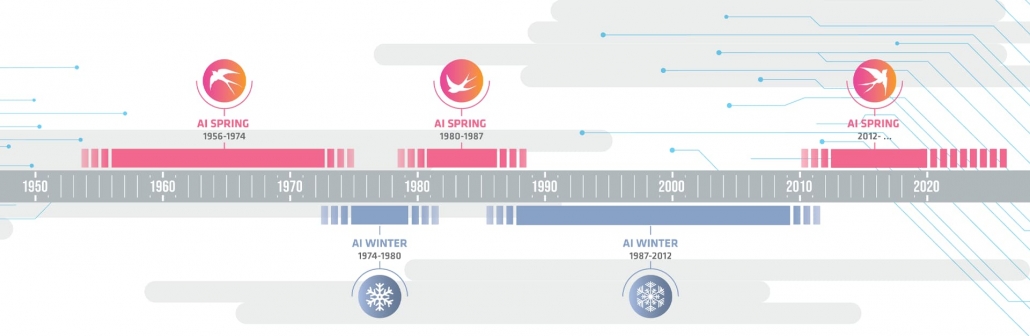

But this strong enthusiasm for artificial intelligence is not new, and it might not last. Over the years, we have seen several Springs (booms) of Artificial Intelligence, and each of these booms has been followed by a depressing Winter.

In the present Spring of AI, both the prospect of its failure followed by the disappointment of a new Winter and the possibility of being an extraordinary success (ultimately implying the mastery of machines over human intelligence), generate fear and concern. At Quidgest, we want to move away from discussing this latter scenario.

That scenario implies a move from Narrow AI (AI to solve specific problems) to Generic AI (AI to solve any issues a human can solve), and finally to Super AI (AI that reproduces itself without human intervention). However, neither Generic nor Super AI is around the corner. Therefore, we think that, by discussing this threat, we would only be contributing to ill-grounded ideas of dystopias, not supported by intelligent thinking.

In practice, specific AI (Narrow AI) is the type of AI we all work with today. It is the debate around this Narrow AI that we are committed to improving, mainly because it can support the digital transformation of our productive structures and our society. To this end, we are also committed to the 2030 sustainable development goals and are closely following the UN “AI for Good” conferences.

What has happened in the last 25 years?

After the last Spring, Artificial Intelligence hibernated and was asleep until about five years ago. Then, suddenly, it started to be used in diverse domains such as autonomous driving, social network recommendations, suggestions on e-commerce platforms, medical diagnostics, facial recognition, path optimization, fraud detection, virtual assistance, narrative generation, or natural language processing.

Most of the AI concepts used today are not new. However, advances in speed and computing power are the big difference between AI in the 2010s and the 1980s (the date of the last Spring). Additionally, in a successful take-over operation, AI has embraced data science, statistics, and quantitative methods, forgetting that these areas had centuries of research behind them before the emergence of AI (research mostly ignored by current AI).

While benefiting from all the technological advances of previous decades, the new generation of artificial intelligence researchers might be, contrary to the previous generation, overlooking some challenges, some concerns, and some relevant domains of knowledge. As already mentioned above, we aim to analyze why the representation of complex knowledge, in particular, has been overlooked.

Knowledge is represented by models. The model is a simplified, independent, and valuable conception of reality. Through rules, with its own assumptions and semantics, it translates what is known about this reality. It can be continuously extended or refined, namely by incorporating new standards. It can represent different aspects of reality differently, to study them more easily. It can be evaluated. It can be tested. It allows simulations, anticipating behaviors, or predicting developments.

Pedro Domingos, in his bestselling book “The Master Algorithm”, described as “a conceptual model of machine learning”, notes that the expression “conceptual model” was defined by the psychologist Don Norman: “A conceptual model is an explanation, usually highly simplified, of how something works.” However, in this learning-focused and algorithm-centered AI generation, models are missing or underrepresented.

What happened to Complex Knowledge Representation?

In previous AI research and investment periods, this Complex Knowledge Representation was referred to as Expert Systems. A simple look at Google Trends shows us that fifteen years ago (the internet has a short memory), Expert Systems and Machine Learning (ML) held similar positions in search trends, and both these terms were in a downward trend until 2011. We can see that until 2014, interest in these terms remained below that of ten years earlier. However, after 2014, these two terms evolved very differently, with searches for ML soaring, while Expert Systems became less and less relevant. Searches for ML currently beat those for Expert Systems by 100 to 1, owing to an exponential growth in interest for ML. And this difference is guaranteed to increase even more.

The Model and not just the Algorithm

We are not trying to resurrect Expert Systems. They seem doomed to exist only in one place: history. But we do want to highlight the need to track the progress of Artificial Intelligence with the representation of complex knowledge through models. To focus on the Model and not just the Algorithm.

In the evolution of knowledge, a Model is the formulation of the problem. It is the thesis, the rules of the game, or the question posed. In comparison, an Algorithm is a way to solve that problem or win that game.

Model and algorithm are two interconnected and not opposing concepts. Intelligence is both the creation of the model (the invention of chess) and the discovery of the algorithm (like being a chess champion). However, the current wave of artificial intelligence is much more focused on the algorithm than on the model. Quidgest cannot agree with this partial view.
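
The distinction can be illustrated with a minimal Python sketch. The game below (a pile of stones, each player takes one to three, whoever takes the last stone wins) is a hypothetical toy example chosen for brevity, not something taken from this article. The first part states the Model (the rules of the game) and the second part is one possible Algorithm that plays well under those rules.

```python
from functools import lru_cache
from typing import List, Optional

# --- The Model: the rules of a hypothetical toy game ---
# A pile of stones; players alternate taking 1, 2, or 3 stones;
# whoever takes the last stone wins.
ALLOWED_TAKES = (1, 2, 3)


def legal_moves(stones: int) -> List[int]:
    """Moves the rules allow from a given state."""
    return [t for t in ALLOWED_TAKES if t <= stones]


def is_terminal(stones: int) -> bool:
    """The game ends when no stones remain."""
    return stones == 0


# --- The Algorithm: one way to play well under that model ---
@lru_cache(maxsize=None)
def player_to_move_wins(stones: int) -> bool:
    """Exhaustive search: True if the player about to move can force a win."""
    if is_terminal(stones):
        return False  # the previous player took the last stone and won
    return any(not player_to_move_wins(stones - t) for t in legal_moves(stones))


def best_move(stones: int) -> Optional[int]:
    """Return a winning move if one exists, otherwise None."""
    for t in legal_moves(stones):
        if not player_to_move_wins(stones - t):
            return t
    return None
```

Changing `ALLOWED_TAKES` changes the game itself, while the search code stays the same. In this sketch, the rules are stated explicitly and can be inspected or revised independently of whatever algorithm is used to play.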

Limitations of current Artificial Intelligence compared to the previous generation

There are three severe limitations to the recent AI wave when compared to the previous one, three decades ago:

- complex knowledge modeling has not evolved

- logical inference and rule-based knowledge have been ignored

- there is a focus on problems with simple answers (dichotomous, rankings, actions limited to a few options).

The absence of explicit knowledge

Present AI considers the explicitness of knowledge unnecessary. When a computer discovers something that we thought only humans could discover (or that even humans could not find), we marvel at the fact that the machine has made such a discovery. In reality, there is a model even when there doesn’t appear to be a model. It is “the model of the absence of a model”, which is a dangerous option. The Model is unstated, thoughtless, unevaluated, and based on stereotypes. Why are outliers removed in statistical inference? Why are all virtual assistants female?

The mandatory probabilistic inference

Because of the relevance it gave to Machine Learning, current AI is probabilistic. However, human intelligence is probabilistic only when it needs to be probabilistic. Logical inference, which we have known and worked with since the syllogisms of ancient Greek philosophers, is as valid as, or more accurate than, statistical inference. And yet, for the automatic generation of complex information systems, current AI has forgotten and does not use:

- logic programming (for instance, with Prolog);

- the representation of knowledge based on declarative rules;

- or inference engines such as Velocity (which Quidgest uses).

Besides, the non-clarification of the inference rules results in a serious problem, which we will discuss later: opacity and non-transparency of decisions.
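
As a rough illustration of declarative rules combined with logical inference, here is a minimal forward-chaining sketch in Python. The rule format, the fact names, and the `forward_chain` function are assumptions made for this example; it is not how Prolog, Velocity, or any Quidgest tool works internally. It only shows that a rule-based conclusion can carry its own justification.

```python
# Hypothetical declarative rules: (set of premises, conclusion).
RULES = [
    ({"is_human"}, "is_mortal"),
    ({"is_mortal", "is_greek"}, "is_mortal_greek"),
]


def forward_chain(facts, rules):
    """Apply rules until no new fact can be derived.

    Returns the set of all known facts and, for each derived fact,
    the premises that justified it.
    """
    known = set(facts)
    explanation = {}
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all its premises are already known.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                explanation[conclusion] = sorted(premises)
                changed = True
    return known, explanation


known, why = forward_chain({"is_human", "is_greek"}, RULES)
for fact, premises in why.items():
    print(f"{fact} because {' and '.join(premises)}")
```

Every derived fact is stored together with the premises that produced it, so the reasoning behind a conclusion can be shown on request.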

Too few outcome options

Today’s AI deals with problems that can be very complex but it is focused on simple outcomes within a limited range of options. What the current AI gives us are dichotomous answers (yes/no; order/don’t order; dog/cat), rankings (a number on a scale, a group to belong to), or limited movements (make this move).

Autonomous driving is no exception. Driving a car means moving forward or backward, speeding up or slowing down, turning right or left. That means a small number of options. However, deciding how to reach a specific place, at a determined time, with a defined driving style, and given the traffic situation, are all actions with a model behind them and not just machine learning.

Having a conversation or writing a report also seems to require more than a simple answer. But conversations with today’s virtual assistants are sequences without a common thread. We are not saying that virtual assistants will not achieve the goal of building a fluent conversation in the future, but they will need a model to do so. In fact, it is possible that this Model already exists, but that AI is ashamed to recognize it and only gives credit to the intelligence of the virtual assistant. Buying movie tickets, booking a restaurant near the cinema, finding transportation: these do not constitute very elaborate knowledge, but there is a model, a logical sequence, to support them.

Whether due to inherent difficulty or to purism, anything that requires more than a simple decision, or a sequence of simple decisions, is not currently considered AI. In fact, in the several conferences we attended, we have seen that software programming is not considered part of today’s Artificial Intelligence applications. Quidgest intends to change this mindset.

The AI Wall

Walls are not useful for AI, nor for innovation in general. There is a lot of bullying in the current AI wave over what does or does not constitute AI: “this is AI”/“this is not AI”. This issue is not new, and there is a well-known quote that “once something is released and becomes commonplace, it is no longer classified as artificial intelligence”. Getting a computer to write very complex information systems, as we do at Quidgest, with quality and (of course) at a much faster speed than a team of programmers, struggles to be considered AI. After all, it is not among the “official disciplines” of current AI: it is not ML, data science, or natural language processing. It will be, at best, robotics.

Today’s AI too often applies the epithet of “unintelligent”. Even if it beats the world champion, a chess program is “unintelligent” because it is specific. However, it is recognized that all AI investigated today is specific (it is Narrow AI) and not generic, so why is there a difference?

Neuronal processes are older than brains

Not that we argue otherwise. We don’t ignore the value of neural networks and machine learning, just for the sake of the “old school”. Science is discovering that there are other brains. That there are brainless living things that follow intelligent strategies. That there is a recognition of the collective intelligence of an anthill or swarm, or even of a city or a living language. If the human brain is not the only Model of intelligence, the processes that evolution has turned into neuronal processes are older than we can imagine.

In his book “The Strange Order of Things,” Antonio Damásio speaks of the enormous success of bacteria: “I ask for a moment the reader to forget the human mind and brain and think instead of the life of bacteria. Bacteria are very intelligent creatures, there is no other way to say it, even if this intelligence is not guided by a mind with feelings and intentions and a conscious point of view. They feel the conditions of the environment and react in advantageous ways for the continuation of their lives. These reactions include complex social behaviors. They communicate among themselves – without words, it is true, but the molecules with which they exchange messages are worth a thousand words. Their calculations allow them to assess their situation and live independently or come together, whichever is best. These unicellular organisms have neither the nervous system nor mind in the same sense as we have them. Nevertheless, they have varieties of perception, memory, communication, and social orientation that are more than adequate to their needs. The functional operations that underpin all this “brainless mindless intelligence” depend on chemical and electrical networks of the kind that nervous systems came to have, develop and exploit later in the evolution.”

Software and AI opacity

At FINLAB, an initiative promoted by the three financial regulators in Portugal – Banco de Portugal, CMVM, and ASF – Quidgest was invited to demonstrate what it has been doing with artificial intelligence in the field of financial regulation. The example we presented was using a neural network to reduce the administrative cost of false positives in detecting money-laundering operations. FINLAB unanimously required the reasoning behind the decision to be explained. Either neural networks evolve towards knowing how to justify their conclusions, or regulators will not accept them. Which makes complete sense. AI (including ML) should improve to include, by design, both the evidence of reasoning and the degree of confidence in the outcome.

Those familiar with Quidgest’s human resources management systems know that, from their inception, one of the system’s essential functions, payroll processing, is followed by a detailed explanation of the underlying calculations. This explanation is a solution differentiator, and it assures the required transparency. Systems developed by Quidgest are, by design, transparent.
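
A calculation that explains itself can be sketched in a few lines of Python. Everything below is hypothetical: the `ExplainedAmount` type, the `net_pay` function, and the flat tax and social security rates are invented for illustration and do not describe Quidgest’s payroll logic or any real tax rule. The only point is that the result is returned together with the steps that produced it.

```python
from dataclasses import dataclass, field


@dataclass
class ExplainedAmount:
    """A computed value together with the steps that led to it."""
    value: float
    steps: list = field(default_factory=list)


def net_pay(gross: float, tax_rate: float, social_security_rate: float) -> ExplainedAmount:
    """Compute net pay using hypothetical flat rates, recording each step."""
    tax = round(gross * tax_rate, 2)
    social = round(gross * social_security_rate, 2)
    net = round(gross - tax - social, 2)
    steps = [
        f"gross salary: {gross:.2f}",
        f"income tax ({tax_rate:.0%} of gross): -{tax:.2f}",
        f"social security ({social_security_rate:.0%} of gross): -{social:.2f}",
        f"net pay: {net:.2f}",
    ]
    return ExplainedAmount(value=net, steps=steps)


result = net_pay(gross=2000.0, tax_rate=0.20, social_security_rate=0.11)
for line in result.steps:
    print(line)
```

Because the explanation is built at the same time as the result, it cannot drift away from what the code actually computed.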

The autocracy of the software is not desirable, but, within the present AI, this doesn’t seem to bother anyone. The problem, as Stuart Russell highlights, is value alignment: how to align the Algorithm with the objective function. Without a knowledge model, it is impossible to define the exact objectives. Russell reminds us of the story of King Midas: a clear and optimized formulation (“whatever I touch, I want to turn into gold”) that led to his starvation. When we define our goals with a limited representation of reality, not analyzed from all perspectives and in all its consequences, this is what will happen. Algorithms that, in pursuing an objective, uncover mechanisms that we have not imagined are the new hands of Midas. And we are not making these algorithms accountable.

Training for Artificial Intelligence

The idea that AI means “learning algorithms not requiring knowledge models” is all the more dangerous because it is the one being passed to the new generation of researchers. Focusing only on intelligence and not on knowledge is naturally attractive to new researchers. As George Orwell said: “Every generation imagines itself to be more intelligent than the one that went before it, and wiser than the one that comes after it.” Research becomes easier, and the literature review takes less time when someone ignores all the challenges posed 30 or 60 years ago. And yet, as Jan Bosch says: “We found several cases where people went out and started to employ all kinds of great deep learning solutions and it turns out that basic simple statistical approaches, going back to hundred-plus years, actually were better than a deep learning algorithm”.

Real Dangers: The Boeing 737-Max MCAS Case

If the current Spring of AI fails to meet expectations, it will be because the Algorithm (the machine) has been given too much power, and the modeling (knowledge) has little strength. There is overconfidence in algorithmic AI today. Overconfidence killed 189 people on a Lion Air flight and another 157 on an Ethiopian Airlines flight in a few months. Both planes crashed for several linked reasons: the absence of variables in the Model (namely, ground clearance), reduced interaction with the surroundings (namely, with pilots), and lack of need to justify their decisions and actions.

Every bad thing we can expect from AI in the future was already present in these accidents (can we call it an accident when the Algorithm was perfectly executed?). And yet, the impacts (on Boeing’s learning and financial penalties) were limited to aviation. AI, as a whole, seems to have learned nothing from these fatalities. Perhaps it can even be said that the excitement about automation and artificial intelligence has somewhat subdued these effects. Of all the Artificial Intelligence conferences we have attended since March 2019, how many have given prominence to this topic? How many speakers reviewed their argument in light of the two Boeing 737 Max MCAS disasters? Without proper models, Artificial Intelligence has all the conditions to result in Artificial Stupidity.

AI for complex software development

Despite being highly fashionable around the world, Artificial Intelligence has not been applied to the main technical problem of our time: that digital transformation requires a software development capability (including AI components) which currently does not exist.

The problem has led to retraining many non-developers, promoting brain importation, developing code academies, and proposing training for children at nurseries. But AI-supported software development automation is not part of the plan. Not even at the academic level is this automation sufficiently studied. Academia is expected to show the applicability of a technique before the industry addresses all the additional problems of product engineering of that technique. However, there are only sporadic cases, in the academic world, where software development through any form of intelligent automation is a curricular subject or the subject of research, dissertations, or articles. The transition from academic AI to the industrial use of AI is an additional challenge, because academic research is done on a small scale, affecting few people through pilots and prototypes.

Modeling very complex information systems

Quidgest’s domain of research, which has remained the same since the last Spring of Artificial Intelligence, three decades ago, is the representation of complex management software through models and their automatic generation.

At Quidgest, this is already industrial research, with all the scalability, efficiency, reliability, and robustness that it implies. And it translates into Genio.

All systems developed by Quidgest and its partners, through Genio, are

- defined unambiguously and declaratively through a template, including various types of components (persistence, interaction, workflows, business rules, security, etc.) for which patterns have been identified;

- automatically generated, once the Model is validated, using inference.
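
The two steps above, a declarative definition followed by automatic generation once the model is validated, can be sketched in Python. This is not Genio’s model format or its generator: the `MODEL` dictionary, the type names, and both functions are hypothetical, and a plain lookup table stands in for the inference that a real generator would perform.

```python
# Hypothetical declarative model of one entity (not Genio's actual format).
MODEL = {
    "entity": "employee",
    "fields": [
        {"name": "id", "type": "integer", "required": True, "key": True},
        {"name": "name", "type": "text", "required": True},
        {"name": "salary", "type": "decimal", "required": False},
    ],
}

# Assumed mapping from model types to SQL column types.
SQL_TYPES = {"integer": "INTEGER", "text": "VARCHAR(255)", "decimal": "DECIMAL(12, 2)"}


def validate(model):
    """Return a list of problems; an empty list means the model is valid."""
    problems = []
    names = [f["name"] for f in model["fields"]]
    if len(names) != len(set(names)):
        problems.append("duplicate field names")
    for f in model["fields"]:
        if f["type"] not in SQL_TYPES:
            problems.append(f"unknown type for field {f['name']}: {f['type']}")
    if not any(f.get("key") for f in model["fields"]):
        problems.append("no key field")
    return problems


def generate_create_table(model):
    """Generate a CREATE TABLE statement, but only from a valid model."""
    problems = validate(model)
    if problems:
        raise ValueError("; ".join(problems))
    columns = []
    for f in model["fields"]:
        parts = [f["name"], SQL_TYPES[f["type"]]]
        if f.get("key"):
            parts.append("PRIMARY KEY")
        elif f.get("required"):
            parts.append("NOT NULL")
        columns.append(" ".join(parts))
    return f"CREATE TABLE {model['entity']} (\n  " + ",\n  ".join(columns) + "\n);"


print(generate_create_table(MODEL))
```

In this sketch, persistence is the only component generated. The same model could in principle feed other generators (forms, validation, documentation), and that is where a single declarative source starts to pay off.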

Quidgest’s Genio has accumulated knowledge over the last thirty years and behaves like an expert in all fields required by the complex task of developing enterprise management software. Usability, integrity, performance, interaction, globalization, technological suitability, and even the ability to evolve hold no secrets for Genio. In this process, Genio solutions (including ERP and core vertical systems) compare favorably with the equivalent products developed through traditional methods. In software development, AI is much more competent than conventional programming.