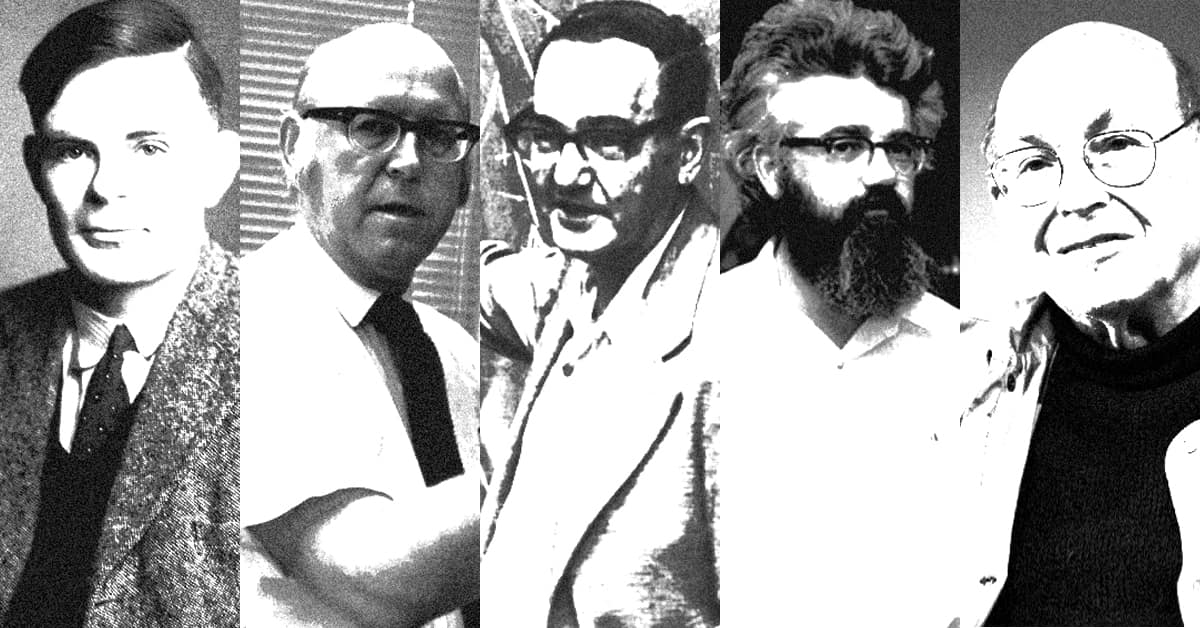

Founding fathers of Artificial Intelligence

João Simões Abreu, March 19, 2021

The 20th century was packed with geniuses who propelled us to heights never experienced before. The personal computer, the Internet, and the television encouraged knowledge exchange and made humanity dream of a science-fiction reality.

The last century also gave rise to five prominent figures who laid the cornerstones of one of the most impressive technologies we know so far: Artificial Intelligence (AI). Alan Turing, Allen Newell, Herbert A. Simon, John McCarthy, and Marvin Minsky are frequently considered the founding fathers of a technology that has revolutionized countless industries.

Read on to learn more about the contributions of the five men who dreamed of endowing a machine with human intelligence.

Alan Turing (1912-1954)

The earliest significant work in the field of AI was done by Alan Turing. In 1935, the British logician and computing pioneer described an abstract computing machine with limitless memory and a scanner that moved back and forth through that memory, symbol by symbol, reading what it found and writing further symbols. The scanner's actions were dictated by a program of instructions that was also stored in the memory in the form of symbols.

This concept, now known as the universal Turing machine, carries with it the possibility of a machine operating on, and therefore modifying or improving, its own program. It laid the groundwork for every modern computer.
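To make the tape-and-scanner description concrete, here is a minimal Python sketch of a single-tape Turing machine simulator. The names `run_turing_machine`, `INCREMENT`, and `BLANK` are hypothetical, and the example program (adding 1 to a binary number) is an illustration of our own, not one of Turing's. Unlike a true universal machine, this sketch keeps the program in a separate Python dictionary rather than encoding it as symbols on the tape.

```python
from collections import defaultdict

BLANK = "_"  # hypothetical blank symbol for unwritten tape cells

# Hypothetical example program: add 1 to a binary number.
# Each rule maps (state, symbol read) -> (symbol to write, head move, next state).
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),      # scan right over the digits
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),  # past the last digit: step back
    ("carry", "1"): ("0", -1, "carry"),      # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),        # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),      # ran off the left end: new leading 1
}


def run_turing_machine(program, tape_input, start="start", halt="halt", max_steps=10_000):
    """Simulate a single-tape Turing machine and return the non-blank tape contents."""
    # The tape is unbounded in both directions; unwritten cells read as BLANK.
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        # Read the symbol under the scanner and look up the matching rule.
        write, move, state = program[(state, tape[head])]
        tape[head] = write  # write a symbol
        head += move        # move the scanner left (-1), right (+1), or not at all (0)
        steps += 1
    written = [i for i, symbol in tape.items() if symbol != BLANK]
    if not written:
        return ""
    return "".join(tape[i] for i in range(min(written), max(written) + 1))


print(run_turing_machine(INCREMENT, "1011"))  # prints 1100
```

Storing the tape in a `defaultdict` lets it grow in either direction, a stand-in for Turing's limitless memory; the `max_steps` guard is an added safeguard, since a real Turing machine may never halt.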

During World War II, Turing was a leading cryptanalyst at the British Government Code and Cypher School at Bletchley Park, where his work on breaking German codes gave the Allies an advantage over their opponents. The war interrupted his work on computing machines, and he could only return to it once hostilities ended in 1945.

However, even as the war disrupted his research, he continued to develop his thoughts on machine intelligence. Donald Michie, Turing's colleague at the Government Code and Cypher School who later founded the University of Edinburgh's Department of Machine Intelligence and Perception, recalled that Turing often talked about how computers could learn from experience and solve new problems by applying guiding principles, a process now known as heuristic problem solving.

In 1947, just two years after the war, Turing gave what was quite possibly the first public lecture to mention computer intelligence. “What we want is a machine that can learn from experience,” the then 34-year-old Turing told an audience in London, adding that the “possibility of letting the machine alter its own instructions provides the mechanism for this.”

Turing introduced many of the central concepts of AI in “Intelligent Machinery”, a 1948 report. Because he never published it, many of its ideas were later reinvented by others. One of them was training a network of artificial neurons to perform specific tasks.

Turing is also known for devising a test of whether a computer is intelligent enough to pass as a human being. The Turing Test, first introduced in his 1950 paper “Computing Machinery and Intelligence”, involves a computer, a human interrogator, and a human interviewee. The interrogator converses with both via a keyboard and a display screen and must decide which of the two is the machine. Although the test was devised in the 1950s, it was not until 2014 that a computer program was claimed to have passed it, and that claim remains disputed.

Turing's legacy endures, and he is often remembered as THE founding father of AI. The A.M. Turing Award, named in his honor, is frequently referred to as the computer science equivalent of the Nobel Prize.

Allen Newell (1927-1992) & Herbert A. Simon (1916-2001)

Newell's career spanned the entire computer boom era, which began in the 1950s. He became internationally known for his work on the theory of human cognition and on computer software and hardware systems for complex information processing. Newell's goal was to make the computer an effective tool for simulating human problem-solving. Most of his AI work was done in collaboration with Herbert A. Simon, his longtime partner in computer science.

Simon, who held a Ph.D. in political science, built a research career around the nature of intelligence, with a particular focus on problem-solving and decision-making, which aligned perfectly with Newell's goal. From the 1960s onward, Simon's main research efforts were aimed at extending the boundaries of AI.

The two AI pioneers began working together in the 1950s. They founded what was most likely the world's first hub dedicated to studying AI at what is now Carnegie Mellon University (CMU), raising the Pittsburgh institution's profile internationally. The work developed at CMU's lab included the General Problem Solver, a program designed to work as a universal problem solver using the means-ends analysis technique.

In 1956, Claude Shannon, John McCarthy, and Marvin Minsky organized a conference on “artificial intelligence”, a then-novel term McCarthy had coined for the event. There, Newell and Simon presented their Logic Theorist, a computer program deliberately engineered to perform automated reasoning. Often called the first artificial intelligence program, it established the field of heuristic programming and proved 38 of the first 52 theorems in Chapter 2 of Whitehead and Russell's Principia Mathematica. Although it was ahead of its time, the program received a lukewarm reception from the participants.

Between them, the pioneering duo received dozens of awards, the most significant being the A.M. Turing Award, which they shared in 1975.

John McCarthy (1927-2011)

McCarthy received the A.M. Turing Award in 1971, four years before Simon and Newell. He contributed extensively to some of the world's most transformative technologies, such as the Internet, robotics, and programming languages.

Most notably, he invented Lisp (short for List Processing), the programming language that became the standard tool for artificial intelligence research and design. In 1959 he came up with garbage collection, a technique in which the parts of the computer's random access memory (RAM) occupied by data that a running computation no longer needs are automatically reclaimed. First developed for Lisp, garbage collection is now routinely used in many programming languages.
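Garbage collection can be sketched in a few lines. The Python below is a minimal mark-and-sweep collector, the classic form of the technique, over a toy heap; the `Cell` class and the `mark`, `sweep`, and `collect` functions are hypothetical illustrations, not McCarthy's original Lisp implementation. Everything reachable from a set of roots is marked, and everything left unmarked is treated as garbage and dropped.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # eq=False: cells are compared by identity, like heap addresses
class Cell:
    """Hypothetical heap object that can point to other heap objects."""
    name: str
    refs: list = field(default_factory=list)
    marked: bool = False


def mark(roots):
    """Mark phase: flag every cell reachable from the roots."""
    stack = list(roots)
    while stack:
        cell = stack.pop()
        if cell.marked:
            continue  # already visited (this also handles cycles)
        cell.marked = True
        stack.extend(cell.refs)


def sweep(heap):
    """Sweep phase: keep marked cells, drop the rest, and clear marks for next time."""
    live = [cell for cell in heap if cell.marked]
    for cell in live:
        cell.marked = False
    return live


def collect(heap, roots):
    """Run one hypothetical collection cycle and return the surviving cells."""
    mark(roots)
    return sweep(heap)


# a -> b is reachable from the root; c is orphaned; d <-> e is an unreachable cycle.
a, b, c, d, e = (Cell(n) for n in "abcde")
a.refs.append(b)
d.refs.append(e)
e.refs.append(d)
heap = collect([a, b, c, d, e], roots=[a])
print([cell.name for cell in heap])  # prints ['a', 'b']
```

The unreachable cycle between `d` and `e` shows why tracing from the roots matters: a simple reference count would never drop to zero for those two cells, but mark-and-sweep still reclaims them.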

In 1964 he followed in Newell and Simon's footsteps by bringing AI to academia, becoming the founding director of the Stanford Artificial Intelligence Laboratory (SAIL). During the 1960s and 1970s, this research center was one of the most prominent places for developing the technology and played a crucial part in machine vision, natural language processing, and robotics. While at SAIL, McCarthy published numerous articles on science fiction and future technologies, predicting what AI would accomplish. He expected the ability to manipulate genetic code to be among the most significant scientific developments of the 21st century.

As stated above, he was the principal organizer of the first conference on “artificial intelligence” (where Newell and Simon introduced the Logic Theorist). From that point forward, the term stuck in the community.

Marvin Minsky (1927-2016)

Minsky firmly believed that the human mind was no different from a computer, a belief that led him to focus on engineering intelligent machines. His most significant impact on AI, which shaped the field for good, came from his insights into human intelligence. As his MIT biography page puts it, Minsky's work was driven by the concept of “imparting to machines the human capacity for commonsense reasoning”.

His passion for endowing machines with intelligence eventually led him to build the first electronic learning system. Dubbed SNARC (short for Stochastic Neural Analog Reinforcement Calculator), it is considered the first neural network simulator.

As fears and alarmist warnings about the dangers of AI grew, with science-fiction novelists depicting machines with enhanced capabilities that could overtake humanity, Minsky took a positive view of a near future in which machines would be able to think. Although this view alarmed some critics, he believed that AI could eventually solve some of humanity's most pressing problems.

Like some of the other founding fathers, he also played an important role in bringing early AI research into academic institutions as a cofounder of MIT's Artificial Intelligence Lab. He received the A.M. Turing Award in 1969.

Quidgest is changing software development productivity by using artificial intelligence to automate 98% of the code present in a solution. A single instance is capable of writing 160 million lines of code per day. Learn more about Quidgest’s technology here.